Apr 25, 2024 | Read time 7 min

Audio Events – a step into understanding more than words

Discover how Audio Events can transform your content analysis, media analytics and accessibility efforts.

We’re launching Audio Events, a way to identify non-speech sounds in media, all using AI.

We believe that understanding sound beyond speech is an important goal in its own right, and that it lays the groundwork for the technical breakthroughs needed for seamless interaction with AI. For media companies, the value might be self-evident; for others, it opens the door to unexplored value.

It's only words?

When interacting face to face, conversation is inherently ‘multi-modal’ – that is, the combination of words, tone, intonation, context, and non-verbal cues all come together to express intended meaning.

Let’s use an example to illustrate this.

Daisy isn’t driving to London tomorrow.

Take this simple sentence. Six words, none of them long or complicated, all easy to understand in isolation. But what does this sentence mean?

If you think the answer to that is simple, think again.

Now imagine someone saying this sentence out loud, emphasizing a different word each time. The meaning of the sentence (at least as heard by another human) changes each time.

**Daisy** isn’t driving to London tomorrow.

Someone else is.

Daisy **isn’t** driving to London tomorrow.

The trip has been cancelled.

Daisy isn’t **driving** to London tomorrow.

She’s getting the train instead.

Daisy isn’t driving **to** London tomorrow.

She’s actually returning from London.

Daisy isn’t driving to **London** tomorrow.

She is going to Cambridge instead.

Daisy isn’t driving to London **tomorrow**.

She is going the day after instead.

Six words, six meanings, all different.

But without the emphasis, the written sentence remains ambiguous unless further words or sentences explain it. The follow-up sentences above add vital information, and without them, meaning is lost (or at least remains open to interpretation).

Now let’s add additional complexity to the sentence. Let’s take this version of the above:

Daisy isn’t **driving** to London tomorrow.

Now let’s imagine a situation where this is being communicated by one friend to another.

In this scenario, the friend knows that Daisy has in fact hired a jetpack to fly herself to London.

As they say the above, their eyes widen with excited glee, because they know that the reality of Daisy’s journey is far more exciting than just getting in a car. Even before the friend reveals the true method of transportation, anyone listening would be able to sense that something was afoot.

The above gets even more complicated in media.

Here’s a great YouTube video that shows just how important music is in conveying the intended meaning behind a scene.

The meaning of a scene can change completely when nothing changes except the music accompanying it.

Similar cues apply in other media.

There’s a reason sitcoms like Friends have live audiences and laugh tracks.

Watch this clip of Friends without any laughter - it's painful.

Applause can serve a similar function. These cues indicate the intended interpretation of the words spoken; without them, that meaning is lost or obscured.

What does this mean for our technology and mission?

True understanding requires more than speech-to-text

Our goal is one of understanding.

This is deliberately stronger than merely transcribing. Writing down the words spoken is, of course, a vital and valuable process, but it is not the end point.

We want to capture and harness as much meaning as possible from the spoken word and the media that contains speech. Given the above examples, we clearly cannot stop with simply writing down the words uttered with exceptional accuracy. We need to be able to gather all the richness of information contained within audio, and aim for true understanding.

Why?

Well, beyond this being an interesting challenge and a noble goal in itself, there are three reasons:

1) Technology that understands us

Technology, and in particular AI, needs to be able to understand what we want. With LLMs and the rise of more conversational technology, it's vital that we are able to communicate in our ‘highest bandwidth’ mode, and that is speech. This is our default way of communicating ideas, wants, desires, needs. In order for our technology to be as helpful as possible, it too needs to be able to understand and interpret what we’re asking of it.

2) Technology to help us understand each other

Technology that understands everyone can also be used to help us understand each other. Global language barriers aren’t going anywhere, and if technology can understand what one person is saying, then it can help convey this meaning to others. Different languages may always exist, but they need not be a barrier in the future.

3) Speech as a source of value

A huge amount of collective knowledge is never written down. Since we talk by default to communicate, much insight and understanding may never make the page. Even if it does, a lot of that context and meaning is lost by only writing down the words uttered. With deep understanding and record keeping of conversations, a brand new, unlimited and growing pool of insight and analytics can be unlocked.

All three reasons are worthy aims.

But where does one start with this?

Well, we have already launched Sentiment, which begins to identify positive, neutral and negative statements in speech, and now we are launching Audio Events.

Audio Events

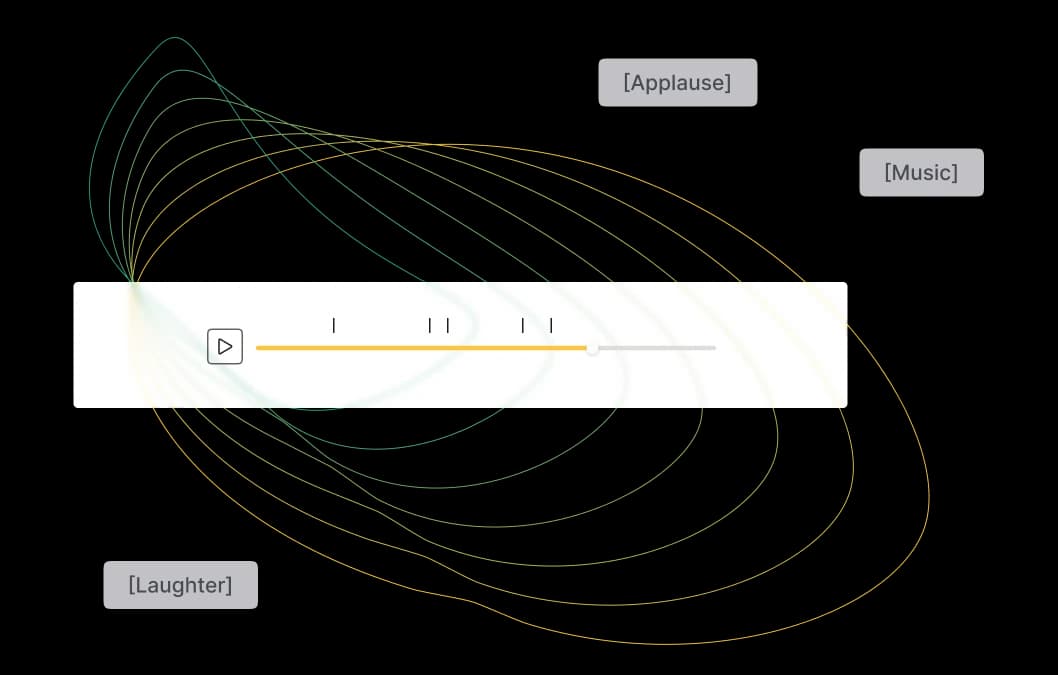

By extending our ASR capabilities to include non-speech sound detection, we are opening new possibilities for media analytics and accessibility.

This feature can detect a variety of sounds that traditional speech recognition technologies might miss, such as music, laughter, and applause, enhancing both the accuracy and richness of media content.

Utilizing advanced AI, the Audio Events feature analyzes audio and video content to identify and label specific non-speech sounds. This capability is integrated seamlessly into our existing ASR API and is available for early access testing.
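To make the idea of labelled non-speech sounds concrete, here is a minimal Python sketch of how detected events might be folded into a caption alongside transcribed words. The `Word` and `AudioEvent` structures and the `build_caption` helper are hypothetical, invented purely for illustration; they are not the response format or client code of our API.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """A transcribed word with its start time in seconds (hypothetical shape)."""
    text: str
    start: float


@dataclass
class AudioEvent:
    """A detected non-speech sound (hypothetical shape, not an API schema)."""
    kind: str    # e.g. "music", "laughter", "applause"
    start: float
    end: float


def build_caption(words, events):
    """Interleave bracketed sound labels with words, ordered by start time."""
    # The middle tuple element breaks ties so a label precedes a word
    # that starts at exactly the same moment.
    items = [(w.start, 1, w.text) for w in words]
    items += [(e.start, 0, f"[{e.kind.capitalize()}]") for e in events]
    items.sort()
    return " ".join(text for _, _, text in items)


words = [Word("Thank", 0.4), Word("you", 0.7), Word("all", 0.9)]
events = [AudioEvent("applause", 1.2, 4.0), AudioEvent("music", 0.0, 0.3)]
print(build_caption(words, events))  # [Music] Thank you all [Applause]
```

A real captioning pipeline would also need to handle sounds that overlap speech, line breaks and display timing; this sketch only shows the basic ordering step.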

[Music] to our ears – the many benefits of audio events

The reliable identification of these events offers a number of benefits across industries:

Enhanced Media Accessibility

By providing more detailed captions that include non-speech sounds, content becomes more accessible to the deaf and hard of hearing community, fulfilling both legal and ethical standards for inclusivity.

Improved Content Analysis

Media companies can gain deeper insights into their content, understanding not just what is said, but also the context and emotional reactions conveyed through other sounds.

Efficiency in Operations

For industries such as EdTech and CCaaS, this feature helps streamline operations by automating the detection and captioning of audio cues, reducing manual labor and associated costs.

While primarily designed for the media sector, the Audio Events feature is valuable for educational technology as it enhances e-learning platforms with richer and more interactive audio descriptions.

Audio Events is hugely valuable today. It can provide additional captions to the 99% of content that does not have any audio descriptions, and it also represents a bolder long-term aim.

A direction of travel and an opportunity

The launch of the Audio Events feature is just the beginning.

We are committed to continuous innovation and are already exploring further enhancements, including the ability to identify more complex sound environments and integrate additional audio classifications.

For example, in the video below we’ve created a proof of concept that uses LLMs to provide more descriptive audio captions for any media.

All captioning in this video is AI generated, and whilst not perfect, you can see how descriptive and useful the captions are:

This opens new possibilities in creating more immersive and rich media experiences that are inclusive and accessible. Because these can be AI-generated, they are open to all. Every single media production and content company can add these kinds of captions to their work, and ensure their stories are engaging for the widest possible audience.

At Speechmatics, we believe in the power of understanding audio – that’s more than simply transcription. With the Audio Events feature, we are making strides toward more inclusive, accurate, and insightful audio-visual media experiences. We invite you to join us on this exciting journey and see what new dimensions of understanding your media content can reach.

Discover how the Audio Events feature can transform your media analytics and accessibility efforts.

Contact our sales team for a demo and see the future of ASR in action today. If you’re interested in adding additional audio events to our roster, please get in touch.
