
Mar 15, 2023

GPT-4: How does it work?

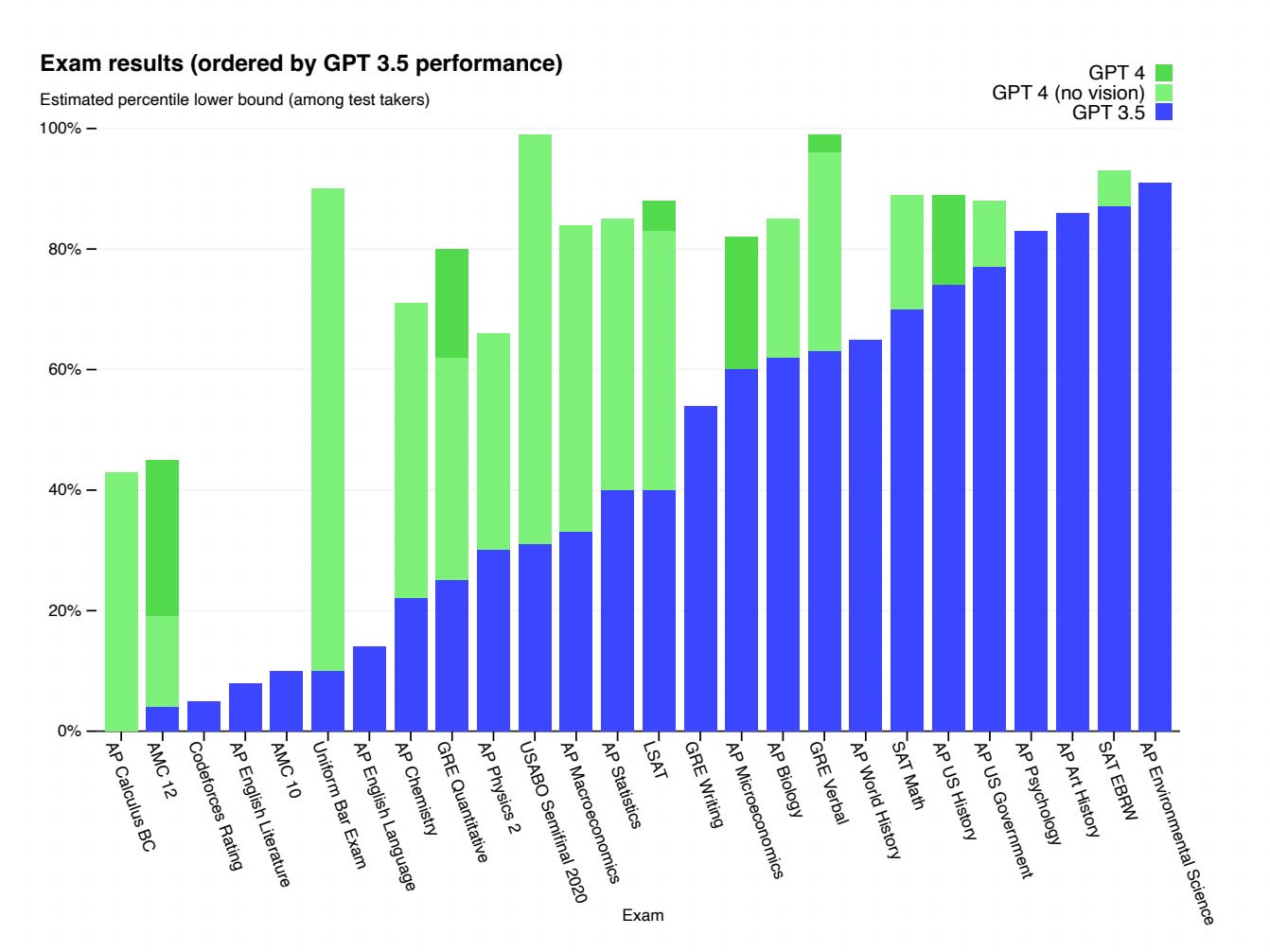

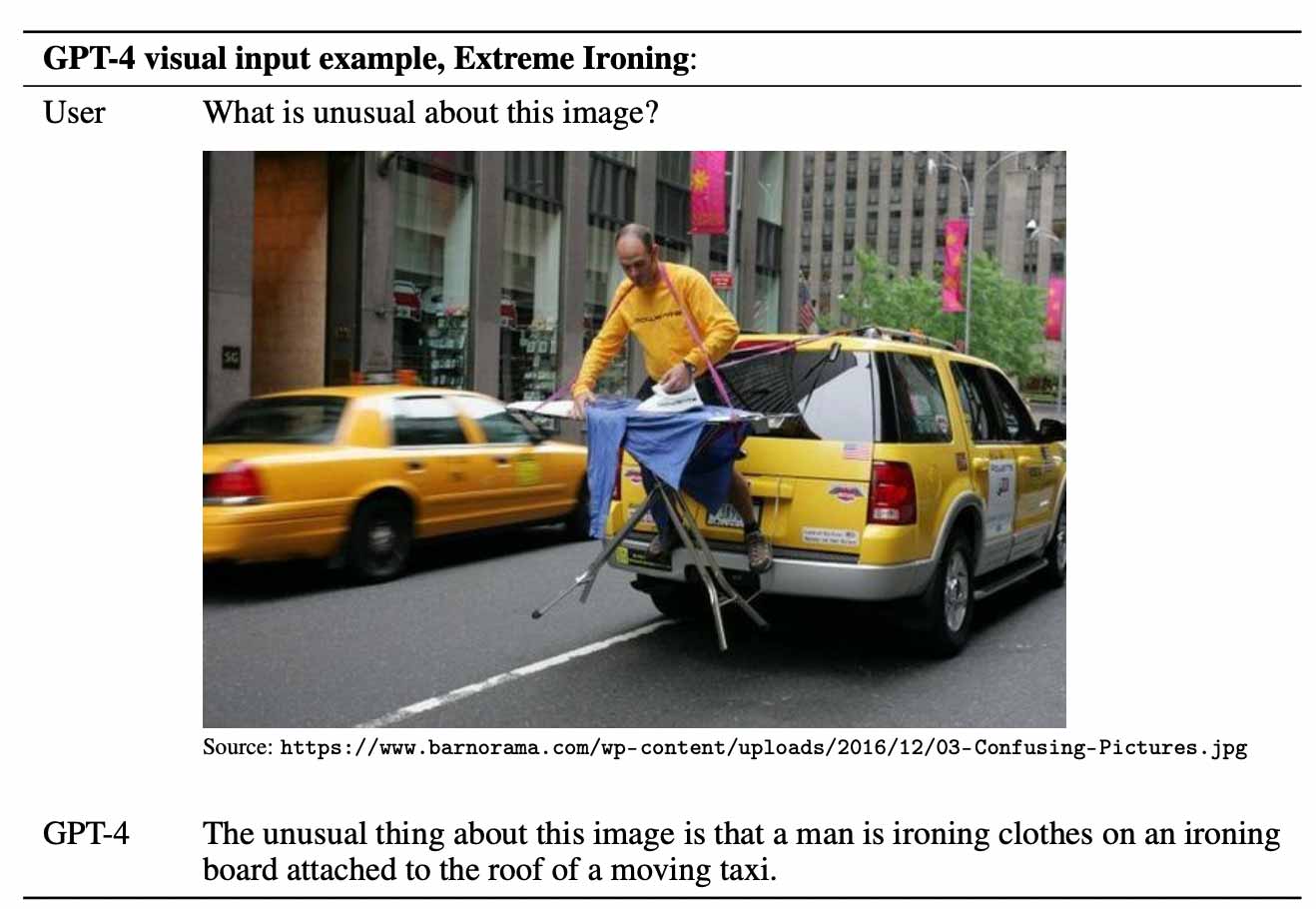

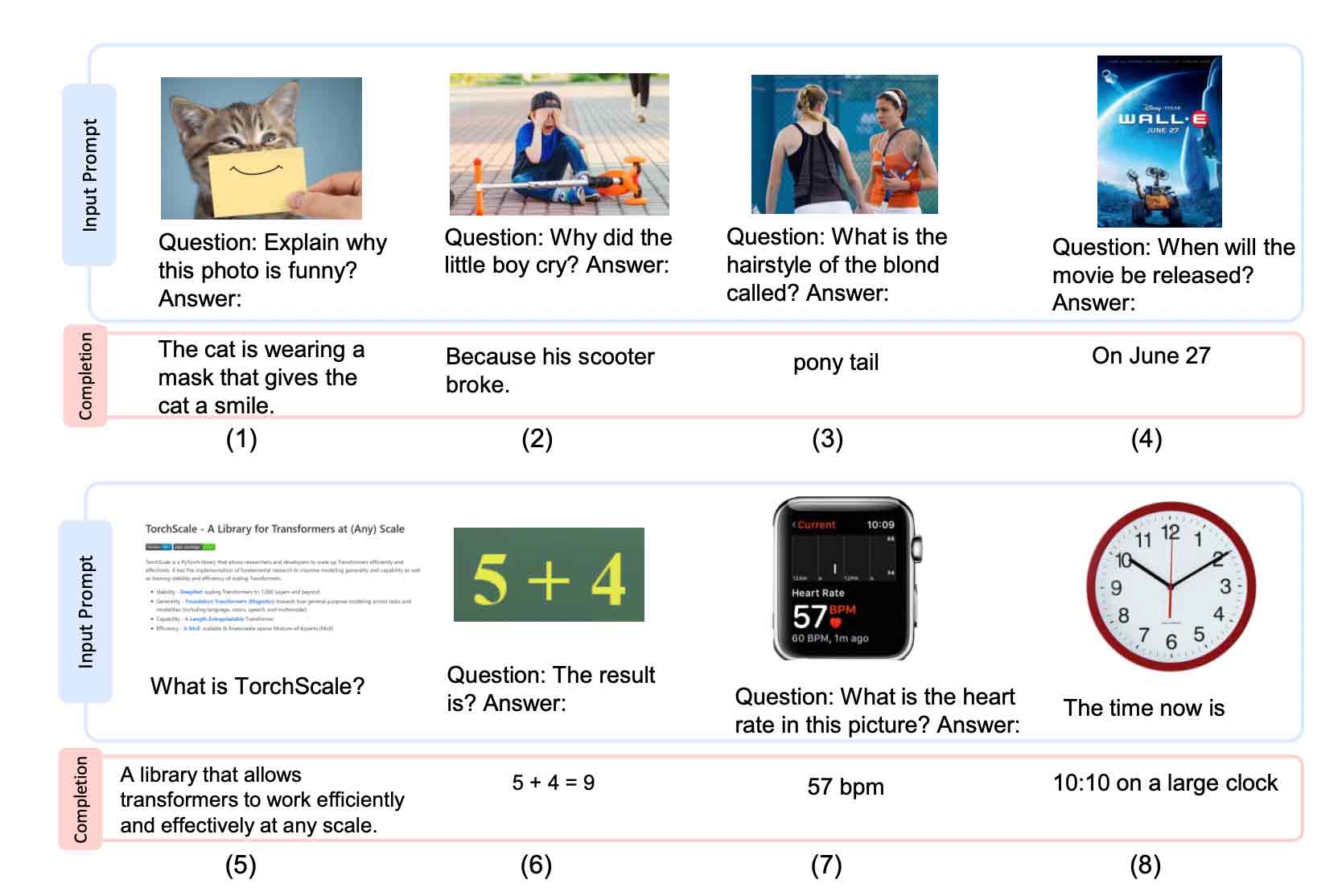

On March 14, OpenAI released GPT-4, a multimodal large language model that accepts both images and text as input and generates text. Learn how GPT-4 works and what it means in this technical blog.

John Hughes, Accuracy Team Lead

Lawrence Atkins, Machine Learning Engineer

Footnotes

\* The GPT-4 technical report contains no detail about "architecture (including model size), hardware, training compute, dataset construction, [or] training method", citing the "competitive landscape and the safety implications" of large language models.

\*\* A token can be either a word or a sub-word unit, produced by byte-pair encoding (BPE). BPE keeps the vocabulary far smaller than one entry per word by breaking words into sub-word units. Starting from individual bytes, it iteratively merges the most frequent pair of adjacent symbols in a training corpus into a new symbol and adds that symbol to the vocabulary, repeating until a desired vocabulary size is reached.
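The merge loop described in the BPE footnote can be sketched in a few lines of Python. This is a toy illustration under our own assumptions, not the tokenizer GPT-4 actually uses: it splits text naively on whitespace, starts from the 256 single-byte symbols, and the helper names `train_bpe`, `merge_pair` and `encode` are hypothetical. Each merge adds exactly one new symbol to the vocabulary.

```python
from collections import Counter

# Illustrative only: a word is a tuple of symbols, each symbol a bytes object.
Symbols = tuple[bytes, ...]


def merge_pair(symbols: Symbols, pair: tuple[bytes, bytes]) -> Symbols:
    """Replace every non-overlapping occurrence of `pair` with one merged symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def train_bpe(corpus: str, vocab_size: int) -> tuple[list[bytes], list[tuple[bytes, bytes]]]:
    """Learn BPE merges until the vocabulary reaches `vocab_size` (toy version)."""
    # Naive pre-tokenization (an assumption): split on whitespace, count words.
    word_counts = Counter(corpus.split())
    # Start every word as a sequence of single-byte symbols.
    words = {
        tuple(bytes([b]) for b in word.encode("utf-8")): count
        for word, count in word_counts.items()
    }
    vocab = [bytes([i]) for i in range(256)]  # base vocabulary: all byte values
    merges: list[tuple[bytes, bytes]] = []

    while len(vocab) < vocab_size:
        # Count adjacent symbol pairs, weighted by how often each word occurs.
        pair_counts: Counter = Counter()
        for symbols, count in words.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break  # every word is already a single symbol; nothing left to merge
        # max() keeps the first pair seen among ties, so runs are deterministic.
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        vocab.append(best[0] + best[1])  # each merge adds one new symbol
        words = {merge_pair(symbols, best): count for symbols, count in words.items()}

    return vocab, merges


def encode(word: str, merges: list[tuple[bytes, bytes]]) -> list[bytes]:
    """Tokenize one word by replaying the learned merges in training order."""
    symbols: Symbols = tuple(bytes([b]) for b in word.encode("utf-8"))
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)
```

For example, on the toy corpus `"low " * 5 + "lower " * 2 + "newest " * 6 + "widest " * 3` with `vocab_size=258`, the two learned merges are `(b"e", b"s")` and then `(b"es", b"t")`, and `encode("newest", merges)` returns `[b"n", b"e", b"w", b"est"]`. Frequent fragments such as "est" become single tokens, while rarer words still break down into smaller pieces; because all 256 bytes are in the base vocabulary, no input is ever out of vocabulary.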

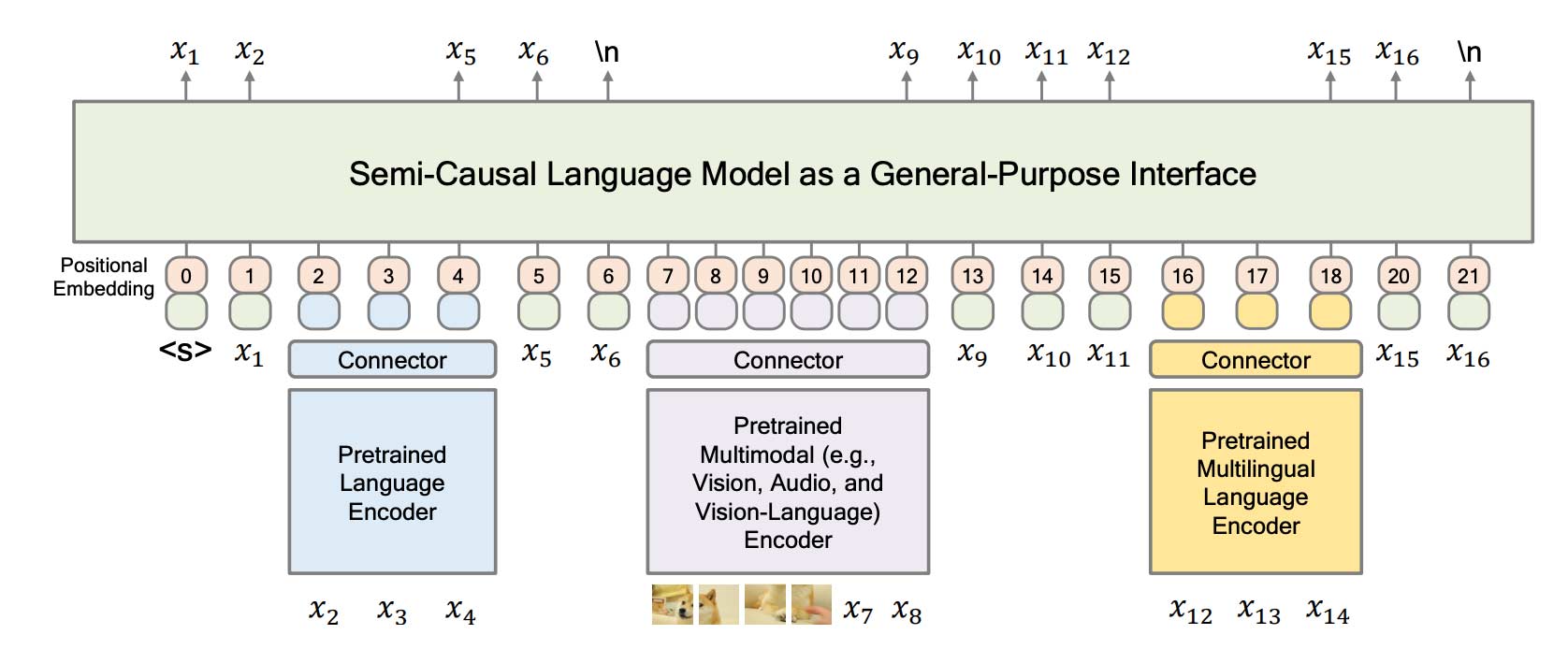

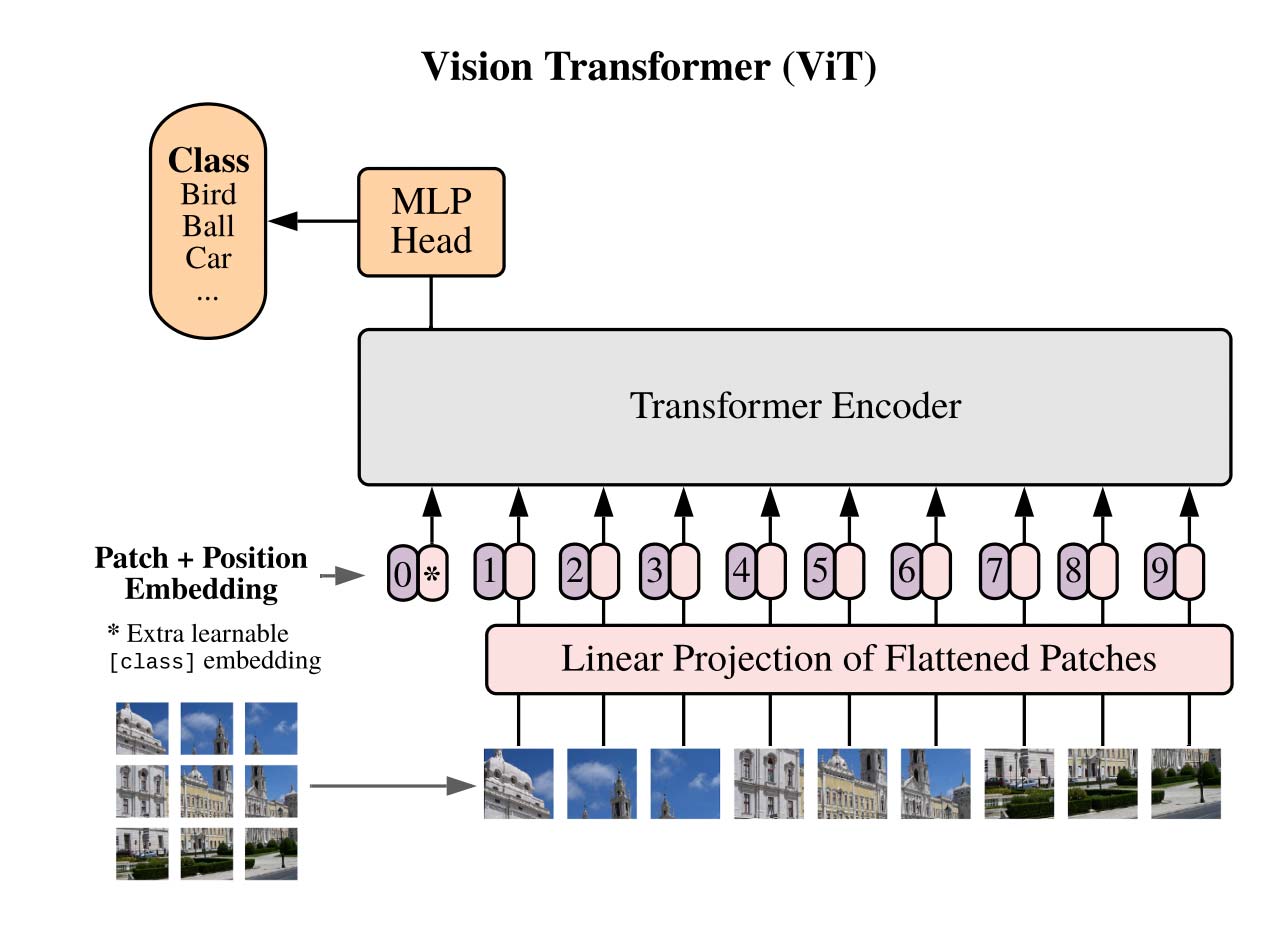

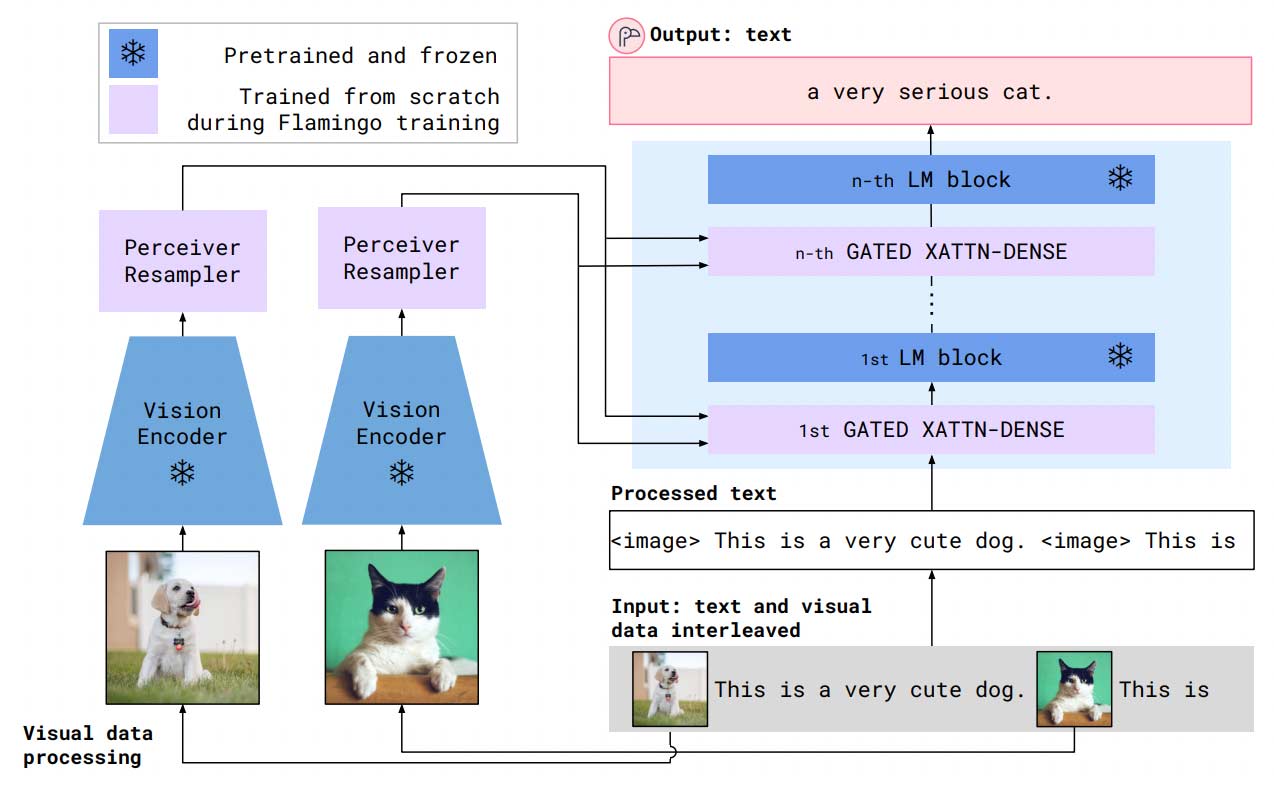


Authors: John Hughes & Lawrence Atkins

Acknowledgements: Edward Rees, Ellena Reid, Liam Steadman, Markus Hennerbichler
