Oct 17, 2023 | Read time 11 min

Foundational speech technology for the AI era - Speech Intelligence is here

A New Chapter for AI and Understanding Every Voice

AI Dominance: LLMs, Generative AI, and the Speech Revolution

We are entering a new era of AI, and Large Language Models and Generative AI have hit the mainstream.

OpenAI’s ChatGPT grew to 100 million monthly active users in January 2023, making it the fastest-growing consumer application in history. Since then, all Big Tech companies have launched their own LLM-powered services, such as Bard from Google. GitHub Copilot, an LLM assistant for developers, reported a 32% productivity gain by analyzing data from almost a million users. Corporate VCs cut investments into non-AI companies by 50% but pumped $22bn into AI investments. Generative AI investment reached $18bn in 2023, up from $3.9bn in 2022. For comparison, $950m in total was invested between 2010 and 2018.

Speech technology has also been benefiting from this AI revolution. We've seen deep learning approaches continue to improve speech-to-text models, using the same underlying Transformer architecture that has been powering LLM breakthroughs. Hyperscalers have been continuing their research into speech models, focused on an academic end-to-end approach, such as Meta's MMS project and Google's Chirp Universal speech model.

OpenAI also released Whisper, an open-source speech-to-text recognition model, further demonstrating the increased focus on speech as a core part of an AI research agenda. Due to OpenAI’s brand, this launch created plenty of press coverage and put speech into the limelight.

For us, this represented a huge opportunity. Competition is not something to be afraid of, especially since it raises the profile of the industry. As Whisper was being launched, we'd already been working on our Ursa system, which demonstrated 25% relative accuracy gains against Whisper. This was followed in quick succession by new features including Translation, Summarization, Sentiment Analysis, Topic Detection, with many more on the way.

We're now at an inflection point for speech technology. Cutting-edge AI can now be applied to speech data to help understand every voice and, ultimately, to create incredible products that use that data.

That's why we're excited to tell you about Speech Intelligence.

A new era for speech.

The dawn of the AI era

We are now well and truly in the dawn of the AI era – when built with people in mind, this emergent technology is going to be ubiquitous and transform our lives for the better. We are still only scratching the surface of this technology and what it can offer the world. LLMs have hugely accelerated the awareness and adoption of AI. However, we believe that most have vastly underestimated the impact LLMs could have for leveraging our most basic form of communication – speech.

Verbal communication is our default and our most natural way of conveying thoughts and ideas. It might seem obvious that most of us use a mouse, keyboard, and touch to operate technology, but we must learn skills to be able to use computers and software. They remain, in almost all instances, unintuitive. Put a toddler in front of a laptop, and they won't design and launch a website for you.

Even when technology can interact with our voices, the technology has large limitations. Almost everyone has a story of a virtual personal assistant or car or smart home hub not understanding their name, accent, or intention. This not only leads to frustration but a sense of being ignored and left behind by technology.

LLMs like GPT, PaLM, and Llama have made the headlines by being trained on the written word, but as a species, we've been talking for 100,000 years longer than we've been writing and continue to say seven times more words each day than we write. It seems strange that almost none of the technology we use today can be operated with the very thing that comes most naturally to us.

Why, then, has speech lagged behind other AI breakthroughs? There are two main reasons: how challenging it is to accurately transcribe all human voices in the real world and, once speech is transcribed, the difficulty of comprehending the resulting unstructured, messy data.

Understanding every voice is hard

To successfully make use of the spoken word, it needs to be captured accurately. Any additional uses must be based on an accurate source of truth. If 'garbage in, garbage out' is true, then the reverse is too.

To maximize the use of the spoken word, this technology will need to understand everyone. And when I say that I mean everyone, regardless of demographic, age, gender, accent, dialect, or location.

Inclusive technology can't be built if it only understands American English.

If you've spent any time evaluating software companies in the speech-to-text or ASR space, you will have seen various charts that depict Word Error Rate or Accuracy. These are broadly useful and if we look at these data points, we can see that we've now reached a point where automatic transcription often exceeds human-level ability, and accurately transcribes audio at a rate (and cost) that simply hasn't been possible before.

These figures do, however, often mask the realities of transcribing audio. The data used to produce these test results is often of high quality, recorded by giving speakers a script of words to recite directly into a microphone. Accurately transcribing this kind of input is, relatively speaking, easy when compared to the low-quality, noisy, real-world audio of people expressing thoughts and opinions in real time. Often these datasets don't reflect the multitude of different accents and dialects that exist in the world.

If these charts displaying a 95% accuracy rating have made you think that accurate transcription of human speech is a solved problem, think again.

This will always be central to our mission here at Speechmatics, and we're committed to regularly updating our models to improve our accuracy across languages and dialects, to ensure that everyone in the world can be understood.
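For readers less familiar with the Word Error Rate (WER) charts mentioned above, here is a minimal sketch of how the metric is commonly computed: the number of word substitutions, deletions, and insertions needed to turn the transcript into the reference, divided by the number of words in the reference. The function name `word_error_rate` and the simple lowercase-and-split tokenization are assumptions made for illustration only. Real evaluations typically apply more careful text normalization, and this is not a description of how any particular vendor scores its models.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Illustrative WER: word-level edit distance / reference length.

    Assumption: words are compared after lowercasing and splitting on
    whitespace. Production benchmarks usually normalize text further.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr

    return prev[-1] / len(ref)


# One wrong word out of five reference words.
wer = word_error_rate(
    "please call me back tomorrow",
    "please call be back tomorrow",
)
print(wer)      # 0.2
print(1 - wer)  # 0.8, the "accuracy" figure often quoted in charts
```

A single headline number like this says nothing about which speakers or recording conditions produced the errors, which is why the make-up of the test set matters as much as the score itself.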

Creating active value with voice data has been transformed with LLMs

A big shift in our thinking has come from seeing transcription not as an endpoint but as the first and most fundamental stage of gaining insights from speech data and driving valuable downstream activities once it has been accurately transcribed. This too has been hard historically.

Natural Language Processing (NLP) as a field of AI has been making progress for many years but has always struggled to generalize across unstructured, messy data.

The thoughts and opinions of thousands of customers across thousands of hours of audio are harder to make sense of than thousands of rows of neatly arranged numbers. It's hard enough for people to read and make sense of transcripts, as we don't write like we speak. There isn't an Excel for qualitative data, after all. But this is changing, and quickly.

This is the main reason that LLMs have caught the headlines. They seem to be able to understand the messy, real-world data contained within vast quantities of words in a way that we've never experienced before. We can ask these chatbots questions about the weather, historical events, get them to write poetry or jokes, and even give us advice and help in our work. They can generalize in a way no previous NLP algorithms were able to achieve.

This is an inflection point because the use cases for this technology are extremely broad. There isn't one thing you can do, there are dozens, thousands, millions.

So, what happens when you combine highly accurate transcription of any human voice with the power of LLMs and AI?

Something big.

Speech Intelligence is here.

Harnessing technology to make the most of speech is what we call Speech Intelligence.

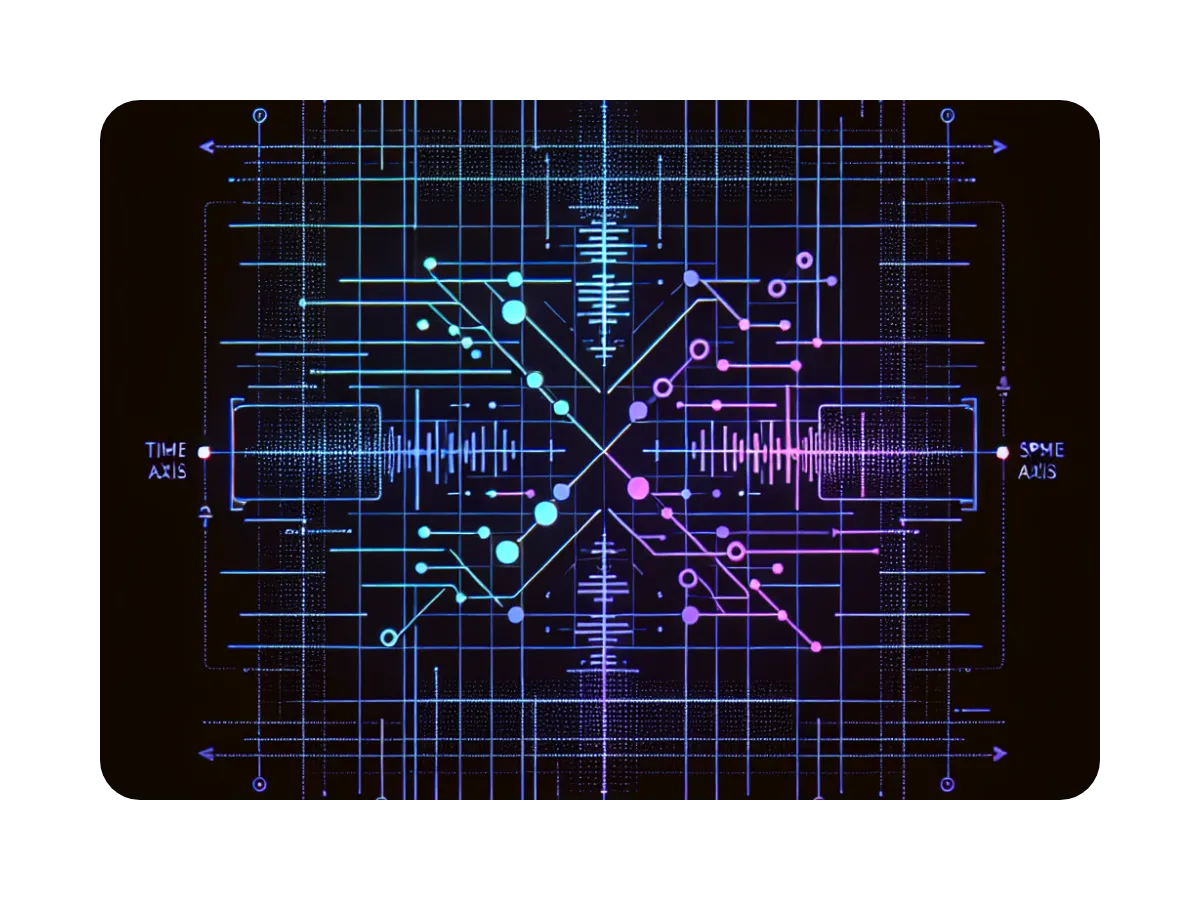

Speech Intelligence is the integration of speech comprehension with the generalization abilities of breakthroughs in other fields of AI. It moves us from passively creating text from speech to actively taking action and creating value directly from our voices.

This is more than merely 'speech understanding' or 'audio intelligence'. We are committed to using new advancements in technology to find value in speech, wherever that may be. Ours is a holistic view of taking audio from its source, and then transcribing, understanding, comprehending, transforming and using it to build great products, across industries. It makes speech a seamless part of the new AI stack.

Our commitment to understanding every voice remains, and we can now do far more with it than was possible in years past. We view conversations and the spoken word as a vast untapped resource that can only now be mined, transformed, understood, and used as fuel for companies to grow and succeed.

In the last few months, we've added translation, summarization, sentiment analysis, topic detection and more, all built on top of industry-leading levels of accuracy (even in difficult real-world environments). This represents a move towards seeing transcription as the first, seamless step in a chain, not the destination.

It's helpful to think of Speech Intelligence in terms of three complementary technology categories:

1) Accurate, intuitable transcription in any language (speech-to-text)

These are the foundations upon which everything else rests; if the foundations are not solid, everything else is deeply compromised. The higher the accuracy we can achieve in our languages, the more reliable and useful any downstream processes built using those transcripts will be. To build great products with the outputs, you must trust the inputs. This means that we will continue to improve our ASR, adding more languages, always ensuring that we retain high accuracy across all accents and dialects, and continuing to mitigate bias in our training and text data. In the world of Speech Intelligence, consistent accuracy across all languages, dialects and accents is more important than ever.

2) Speech capabilities built using transcribed audio, which can be applied to one or more transcripts

If step 1 is all about recording speech data, then this step asks 'now, what can we do with this to make it more useful?'. Speech capabilities involve transforming transcripts, be that via translation, or capabilities like summarization, sentiment analysis, topic detection, and anything that looks to either understand or interpret the transcript. This makes the potentially overwhelming volume of now-written words easier to parse and comprehend. We're only skimming the surface with these capabilities but have already used them to provide digestible summaries of lengthy meetings, provide detailed sentiment analysis of multiple customer calls, and parse a long podcast into a list of the topics discussed, along with links to the original audio. We're excited about further breakthroughs and adding more capabilities to our roster.

3) Solutions built as bundles of capabilities for customers

Something particularly exciting is that these components are extremely modular and can be applied to an almost infinite number of industries and use cases. If these are the ingredients, the number of recipes is vast, and will only grow over time.

Many of these have already been built and used by our customers across different industries, but there are many more yet to be built. We will be working alongside our customers to find new 'bundles' of capabilities and applying them to real business challenges, to provide tangible value for them and their customers in turn.

These represent something truly different to the old world of ASR.

The world of Speech Intelligence is full of opportunities to build innovative new products and features, the likes of which we’ve never seen before.

Speech Intelligence represents the opportunity for speech to become one of the largest drivers of value within companies and teams.

The use cases for this technology are vast, with a broad range of applications already being utilized today:

Real-time captioning of any live event, in any language, regardless of the language being spoken by those on screen.

Educational videos and online courses (even ones on technical subject matters) are effortlessly transcribed and translated, with summaries of every lesson sent out to students across the world.

Virtual sales assistants that use the live conversation to pull supporting marketing collateral and information, presenting them to the salesperson in real-time, without the need to find them on their computer.

Global Media Monitoring across any language, providing real-time alerts and summaries of the context in which a brand name or term was used.

In the future, the list of products that can seamlessly leverage speech will be very, very long indeed.

An exciting new chapter for us and our customers

Speech Intelligence represents both a statement of what we do and a north star.

We know that speech has the potential to become a foundational building block of the technology stack for the AI era.

We know that there are technological breakthroughs ahead of us that will allow our customers to use speech data in ways we can't even imagine yet, and we're committed to finding those and bringing them to market.

I’d like to finish with a quick story on those rare occasions where you get a visceral reminder of why your mission is so important.

We recently sponsored and provided the captions for Interspeech 2023, a conference on the science and technology of spoken language processing. The conference took place in Dublin, and we provided real-time captions for every talk, displayed on large screens in the hall.

Though the language of the conference was English, the speakers and audience were international, with many having to grapple with complicated technological and academic concepts in their second or third language. In one talk, the speaker (who had done the presentation in their second language, English) was asked a question by an audience member whose first language also was not English. During this exchange, there was a moment where both the speaker and audience member turned to the big screens to use Speechmatics’ real-time captions to help understand both the question being asked and the answer given. The screen became their source of truth; the window into what the other was conveying.

In other words, they were using our technology to understand each other.

This is what it means to create technology that increases inclusivity and collaboration.

This, for me, is why we do this.

This is why Speech Intelligence is important.

We’d love for you to join us for the journey...
