Jul 20, 2023 | Read time 6 min

Closed Captioning vs Open Captioning in Media Distribution

Unveiling the Differences: Closed Captioning vs Open Captioning in Media Distribution and the Transformative Potential of Speech-to-Text Technology.

Before comparing closed captioning and open captioning, let's first clear up some definitions. Captioning is sometimes referred to as subtitling, but the two serve different purposes and have distinct traits. Subtitles assume viewers can hear the audio and are typically used when the viewer doesn’t speak the language in the video. Captions, by contrast, are primarily used to help viewers who cannot hear the video audio.

Captions are textual representations of the spoken dialogue, sound effects, and other audio elements in media, and they can be open or closed. Closed captions are much more common and are offered on most video platforms. Far fewer people know about open captions and when they can be used.

Closed Captioning vs Open Captioning

So, what's the difference between closed captioning and open captioning? In short, open captions are permanently displayed on the screen and cannot be turned off, while closed captions are optional and can be enabled or disabled by the viewer. Closed captions provide more customization options and flexibility, while open captions ensure universal accessibility.

Open captioning, also known as burnt-in or hard-coded captioning, involves permanently embedding captions directly into the media content. These captions are always visible and cannot be turned off, so they are available to every viewer, whether or not the viewer knows how to enable captions.

With 80% of U.S. consumers more likely to watch an entire video when captions are provided, you can see why captioning is so important.

Open captions are especially beneficial for those with hearing impairments, as they ensure universal accessibility. They provide a seamless viewing experience for people with hearing loss and those who rely on captions to understand the dialogue and audio cues.

One key advantage of open captioning is convenience. Viewers do not need to search for or enable captions manually. This makes open captions suitable for various viewing environments such as public spaces, where enabling captions may not be feasible or accessible to all viewers.

However, it’s important to note that open captioning may not be suitable for all scenarios. The visible nature of open captions can impact the visual aesthetics of the content, especially in cases where preserving the original presentation is crucial. Some viewers may find open captions distracting or prefer a cleaner viewing experience without permanently visible text on screen. Cue closed captioning...

Closed captioning allows viewers to enable or disable captions based on their preference. These captions are stored separately from the media content and can be accessed through a dedicated menu or by pressing a specific button on the viewing platform.
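To make "stored separately from the media content" concrete, here is a minimal sketch of writing captions to a sidecar file in the widely used SubRip (SRT) text format. The cue data and function names are hypothetical and chosen only for illustration; real platforms may use other formats such as WebVTT or broadcast-specific standards.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues) -> str:
    """Serialize (start_seconds, end_seconds, text) tuples as SRT text."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    # Each block ends with a newline, so joining with "\n" leaves
    # the blank line that separates cues in an SRT file.
    return "\n".join(blocks)


# Hypothetical cues, invented for this example.
cues = [
    (0.0, 2.5, "[upbeat music]"),
    (2.5, 5.0, "Welcome back to the show."),
]
srt_text = to_srt(cues)
print(srt_text)
```

Because the text lives in its own file rather than in the video pixels, a player can show it, hide it, or restyle it on request, which is what distinguishes closed captions from burnt-in open captions.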

One key advantage of closed captioning is its customization. Viewers can adjust the appearance of the captions to suit their preferences. They can typically modify aspects such as font size, color, and positioning on the screen.

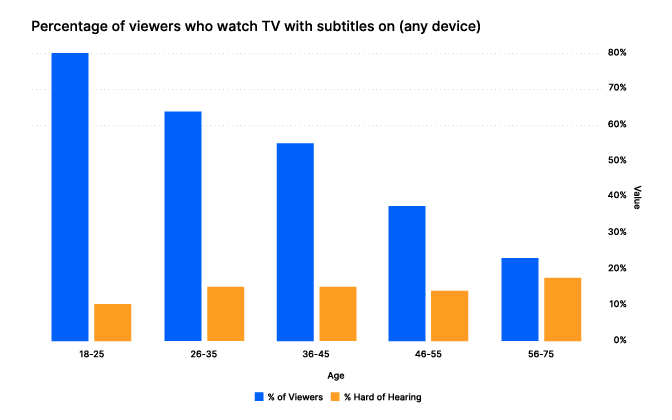

This appears especially popular with people between the ages of 18-25, with 80% preferring video with subtitles:

(Source: Kapwing - Younger views, age 18-25, greatly preferred video subtitles even though fewer of these viewers have hearing issues.)

In media distribution, closed captioning has been widely adopted and, in some cases, is a regulatory requirement. Where such rules apply, broadcasters, streaming platforms, and content creators are legally obliged to provide closed captions for their content to ensure equal access to information and entertainment.

Tom Wootton, Head of Product at Red Bee Media, discussed how Red Bee Media seamlessly delivers closed captions for streaming services and the broadcast television industry.

What are the Benefits that Speech-to-Text Technology Can Provide for Captioning?

Online platforms and broadcasters can leverage automatic speech recognition (ASR) systems to generate captions automatically, reducing the need for manual transcription. Here are the six key benefits that speech-to-text technology can provide for captioning:

Efficiency: automatically converting spoken words into written text eliminates the need for manual transcription and significantly reduces the time and effort required to generate accurate captions.

Scalability: the rapid, automated generation of captions for large volumes of content means captions can be applied cost-effectively to numerous videos and broadcasts.

Real-time captioning: captions can be displayed immediately during live events or broadcasts, enhancing accessibility for individuals who rely on captions in real-time scenarios.

Cost savings: reducing the need for manual labor significantly lowers the overall cost of producing accurate and timely captions.

Accessibility: captions ensure that individuals with hearing impairments can access and engage with videos, broadcasts, and other audio-visual content, promoting equal participation and inclusion for all.

Multilingual reach: captions can be created for diverse global audiences, breaking down language barriers for enhanced accessibility and understanding.

Using Speech-to-Text Technology to Improve Captioning

Speech-to-text technology has revolutionized captioning, making the process much more cost-effective and efficient. Traditionally, captions were created manually by trained captionists who transcribed the dialogue and synchronized it with the media content. However, advancements in ASR technology have significantly streamlined this process.

Speech-to-text technology has made closed captioning more accessible and scalable. Online platforms and broadcasters can leverage ASR systems to generate captions for their content automatically. This automation allows for faster captioning turnaround times, making it possible to provide captions for a vast amount of media content. Additionally, ASR technology enables real-time captioning for live broadcasts, bringing accessibility to live events such as news broadcasts, sports, and conferences.
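As a rough illustration of how automatically generated text becomes captions, the sketch below groups word-level timings into short caption cues. The `(text, start, end)` word tuples are a hypothetical stand-in for ASR output, not the format of any particular product, and the length and duration limits are arbitrary assumptions.

```python
def close_cue(words):
    """Collapse a run of (text, start, end) words into one (start, end, text) cue."""
    return (words[0][1], words[-1][2], " ".join(w[0] for w in words))


def group_words(words, max_chars=32, max_duration=4.0):
    """Greedily pack words into cues without exceeding the given limits."""
    cues = []
    current = []
    for word in words:
        candidate = current + [word]
        text = " ".join(w[0] for w in candidate)
        duration = candidate[-1][2] - candidate[0][1]
        # Start a new cue if adding this word would make the cue too long.
        if current and (len(text) > max_chars or duration > max_duration):
            cues.append(close_cue(current))
            current = [word]
        else:
            current = candidate
    if current:
        cues.append(close_cue(current))
    return cues


# Hypothetical word timings (seconds), invented for this example.
words = [
    ("Captions", 0.0, 0.5),
    ("make", 0.5, 0.7),
    ("video", 0.7, 1.0),
    ("accessible", 1.0, 1.6),
    ("to", 1.6, 1.7),
    ("everyone.", 1.7, 2.3),
]
cues = group_words(words, max_chars=20)
for start, end, text in cues:
    print(f"{start:.1f}-{end:.1f}: {text}")
```

A production pipeline would likely also break on punctuation and speaker changes; this greedy grouping only shows the basic idea of turning a timed transcript into display-sized cues.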

Real-time captioning for live events has become more accessible and accurate, thanks to speech-to-text technology. ASR systems can transcribe spoken words almost instantaneously, allowing for real-time captioning alongside live audio content. This feature ensures that individuals with hearing impairments can actively engage with and understand live events.

Automated captioning reduces the dependency on manual labor, resulting in significant cost savings for media platforms and content creators. This cost-effectiveness allows for the captioning of a broader range of content, enabling platforms to make their entire libraries of audio-visual material accessible to all, whether internal teams or people with hearing impairments.

Common Challenges of Using ASR in Captioning

Using ASR for captioning comes with some common challenges. One key challenge is transcription accuracy: ASR systems can struggle with complex vocabulary, accents, background noise, and overlapping speech, resulting in errors in the generated captions. Additionally, identifying individual speakers can be problematic, particularly in situations with multiple participants or rapid speaker switches.
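One common way to put a number on transcription accuracy is word error rate (WER): the word-level edit distance between a reference transcript and the ASR output, divided by the number of reference words. The article does not name a metric, so treat the sketch below as one reasonable option rather than the method used by any particular vendor; the sample sentences are invented.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# Invented example: one substituted word out of five.
wer = word_error_rate(
    "the quick brown fox jumps",
    "the quick brown box jumps",
)
print(f"WER: {wer:.2f}")
```

This simple version ignores punctuation handling and text normalization, both of which affect the score in practice.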

Other challenges include capturing punctuation, sentence structure, and formatting accurately, which can affect the readability and comprehension of the captions. Real-time performance may suffer from latency issues, causing delays in generating captions that align with the spoken words. Language and domain adaptation can be difficult, as ASR models may struggle to accurately transcribe speech in different languages or specialized fields. Finally, ASR systems may have limitations in understanding context, nuances, and sarcasm, potentially leading to misinterpretations or incorrect captions.

Addressing these challenges involves both ongoing improvements in ASR technology, such as fine-tuning models and integrating contextual information, and manual editing or review of captions to ensure accuracy and quality.

With continued advances in speech recognition algorithms and machine learning techniques, the accuracy of ASR systems keeps improving.

Revolutionizing Captioning in Media Distribution

The advancements in speech-to-text technology have revolutionized captioning in media distribution, making it more efficient, scalable, cost-effective, and inclusive. By leveraging ASR systems, media platforms and content creators can provide universal access to audio-visual content, empowering all individuals to fully engage with and enjoy the rich multimedia experiences offered in today's digital age.