Expanding accuracy through innovation

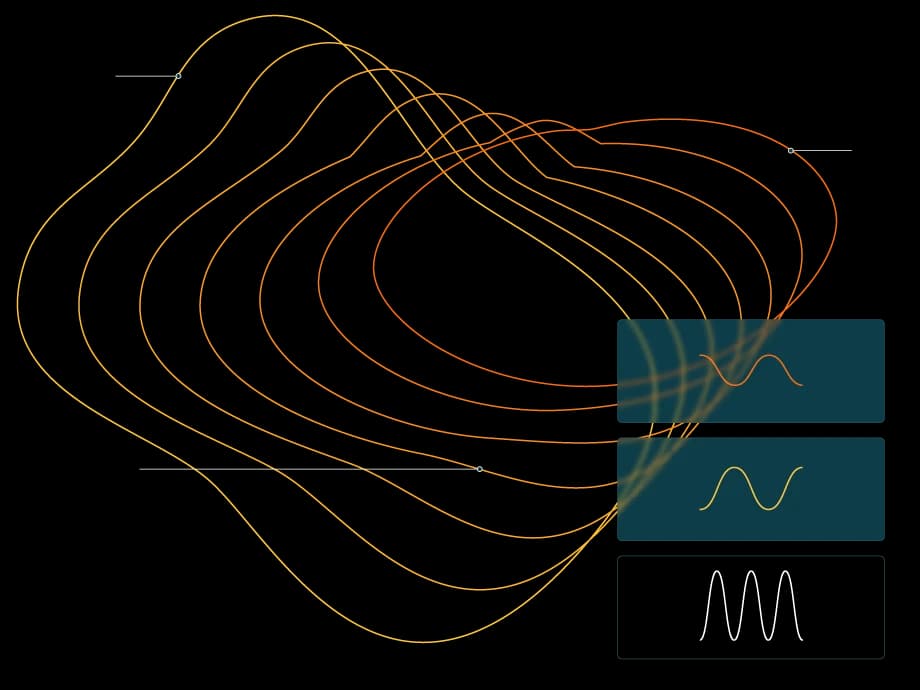

At Speechmatics, self-supervised learning (SSL) serves as a transformative approach in training speech models, harnessing unlabeled data to enhance our speech recognition systems.

Because SSL does not depend on labeled data, our models can identify patterns in vast amounts of unlabeled audio on their own. This significantly expands the variety of speech they can learn from and improves accuracy across multiple languages.

Tirelessly pushing speech technology forward...

Throughout the years, Speechmatics has remained at the forefront of speech recognition research and innovation. We are consistently pushing the boundaries of what's possible in speech-to-text technology.

Our published research

Bias-Augmented Consistency Training Reduces Biased Reasoning in Chain-of-Thought

James Chua, Edward Rees, Hunar Batra, Samuel R. Bowman, Julian Michael, Ethan Perez, Miles Turpin. March 8, 2024.

Debating with More Persuasive LLMs Leads to More Truthful Answers

Akbir Khan, John Hughes, Dan Valentine, Laura Ruis, Kshitij Sachan, Ansh Radhakrishnan, Edward Grefenstette, Samuel R. Bowman, Tim Rocktäschel, Ethan Perez. February 9, 2024.

Hierarchical Quantized Autoencoders

Will Williams, Sam Ringer, Tom Ash, John Hughes, David MacLeod, Jamie Dougherty. February 19, 2020.

Texture Bias Of CNNs Limits Few-Shot Classification Performance

Sam Ringer, Will Williams, Tom Ash, Remi Francis, David MacLeod. October 18, 2019.

Discriminative training of RNNLMs with the average word error criterion

Remi Francis, Tom Ash, Will Williams. November 8, 2020.

The Speechmatics Parallel Corpus Filtering System for WMT18

Tom Ash, Remi Francis, Will Williams. Conference on Machine Translation (WMT), October 31 – November 1, 2018.

A Framework for Speech Recognition Benchmarking

Franck Dernoncourt, Trung Bui, Walter Chang. Adobe Research. Interspeech 2018

Scaling Recurrent Neural Network Language Models

W. Williams, N. Prasad, D. Mrva, T. Ash, A.J. Robinson. ICASSP 2015. February 2, 2015.

One billion word benchmark for measuring progress in statistical language modeling

C. Chelba, T. Mikolov, M. Schuster, Q. Ge, T. Brants, P. Koehn, A.J. Robinson. Interspeech 2014. December 10, 2013.

Connectionist Speech Recognition of Broadcast News

A. J. Robinson, G. D. Cook, D. P. W. Ellis, E. Fosler-Lussier, S. J. Renals, and D. A. G. Williams. Speech Communication, 37(1), 2002.

Recognition, indexing and retrieval of British broadcast news with the THISL system

A.J. Robinson, D. Abberley, D. Kirby, and S. Renals. Proceedings of the European Conference on Speech Technology, volume 3, pages 1267–1270, September 1999.

Time-First Search for Large Vocabulary Speech Recognition

A.J. Robinson and J. Christie. ICASSP, pages 829–832, 1998.

Forward-Backward Retraining of Recurrent Neural Networks

A. Senior and A.J. Robinson. Advances in Neural Information Processing Systems 8, 1996.

The Use of Recurrent Networks in Continuous Speech Recognition

A.J. Robinson. Automatic Speech and Speaker Recognition: Advanced Topics, chapter 10.

The Application of Recurrent Nets to Phone Probability Estimation

A.J. Robinson. IEEE Transactions on Neural Networks, 5(2), March 1994.

A Recurrent Error Propagation Network Speech Recognition System

A.J. Robinson and F. Fallside. Computer Speech and Language, 5(3):259–274, July 1991.

Dynamic Error Propagation Networks

A. J. Robinson. PhD thesis, Cambridge University Engineering Department, February 1989.

Technical spotlight

Sparse All-Reduce in PyTorch

The All-Reduce collective is ubiquitous in distributed training, but is currently not supported for sparse CUDA tensors in PyTorch.

In the first part of this blog, we contrast the existing alternatives available in the Gloo/NCCL backends.

The.Shed: Speechmatics Capabilities in real-time

Imagine being able to understand and interpret spoken language not only retrospectively, but as it happens. This isn't just a pipe dream — it's a reality we're crafting at Speechmatics.

Our mission is to deliver Speech Intelligence for the AI era, leveraging foundational speech technology and cutting-edge AI.

An Almost Pointless Exercise in GPU Optimization

Not everyone is able to write funky fused operators to make ML models run faster on GPUs using clever quantization tricks. However, lots of developers work with algorithms that feel like they should run faster on the thousands of cores in a GPU than on the dozens of cores in a server CPU.

To see what is possible and what is involved, I revisited the first problem I ever considered trying to accelerate with a GPU.